Hi guys, I’ve been trying to apply the cache dependencies feature from the GitLab CI documentation to a project of mine that runs the platformio ci command for each version pushed to the repo. The project uses 3-5 external libraries, and the job has been taking longer and longer as more libs are added.

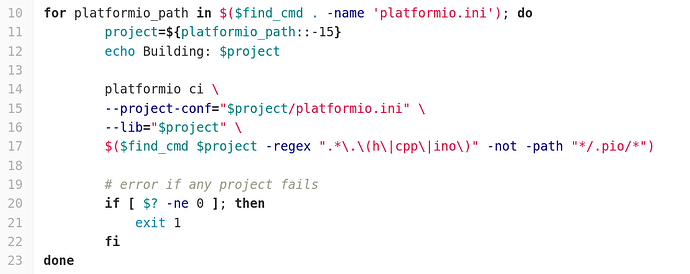

The image above shows the script I use for platformio ci (platformio-ci.sh).

The .gitlab-ci.yml file is like so:

    platformio:
      stage: lint
      image: registry.xxxxxxxxxxxxxxxxxxxxxx
      cache:
        key: "$CI_JOB_NAME-$CI_COMMIT_REF_SLUG"
        paths:
          - ISensor/.pio/build/
      script: ./platformio-ci.sh

I think I’m doing everything properly, but somehow the time each job takes is not decreasing.

I chose the path above because the building, compiling, indexing, etc. seem to happen there:

    Compiling .pio/build/uno/src/main.cpp.o
    Compiling .pio/build/uno/lib22b/OneWire_ID1/OneWire.cpp.o
    Archiving .pio/build/uno/lib22b/libOneWire_ID1.a
    Indexing .pio/build/uno/lib22b/libOneWire_ID1.a
    ...

    | |-- <Wire> 1.0
    Building in release mode
    Compiling .pio/build/teensy36/src/main.cpp.o
    Archiving .pio/build/teensy36/lib21a/libISensor.a
    Indexing .pio/build/teensy36/lib21a/libISensor.a
    Compiling .pio/build/teensy36/lib68f/Wire/Wire.c

PlatformIO is running in a Docker container, and I’m not sure where platformio ci is installing the libs used in the project.

At the end of each job I get this:

    Creating cache platformio-cache-ci...
    WARNING: ISensor/.pio/build/: no matching files
    Archive is up to date!
    Created cache
    Job succeeded

no matching files?

Created cache?

What does that mean?

I also noticed the following at the beginning of each job:

    Successfully extracted cache
    Authenticating with credentials from /var/lib/gitlab-runner/.docker/config.json 09:24

9 minutes for authentication? Seriously?

Also, apparently some cache is being used. But this path seems to be inside the container, where AFAIK it is not possible to cache data, since it will be lost in the next build.

    LibraryManager: Installing id=1
    Using cache: /root/.platformio/.cache/16/7848ce2817f213c50c23dafb9c6eaf16
    Unpacking...
    OneWire @ 2.3.5 has been successfully installed!
    LibraryManager: Installing id=54
    Using cache: /root/.platformio/.cache/70/6e77374ce8b723995884c1935d2c7670
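For reference, here is an untested sketch of what I might try next. It rests on two assumptions I have not verified on my runner: that GitLab only caches paths located inside the project directory, and that setting the PLATFORMIO_CORE_DIR environment variable moves PlatformIO's /root/.platformio directory (including the library download cache from the log above) into the project directory. The .platformio/ directory name is just a placeholder I picked.

```yaml
platformio:
  stage: lint
  image: registry.xxxxxxxxxxxxxxxxxxxxxx
  variables:
    # Assumption: relocates PlatformIO's core dir (currently /root/.platformio
    # inside the container) to a path under the project directory.
    PLATFORMIO_CORE_DIR: "$CI_PROJECT_DIR/.platformio"
  cache:
    key: "$CI_JOB_NAME-$CI_COMMIT_REF_SLUG"
    paths:
      # Placeholder directory name; must match the variable above.
      - .platformio/
  script: ./platformio-ci.sh
```

If that helps, the build output might be cacheable in a similar way by passing --build-dir (pointing somewhere inside the project directory) together with --keep-build-dir to platformio ci. As far as I can tell, platformio ci builds in a temporary directory by default, which could explain the "no matching files" warning.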

I’m kind of a newbie, so I really appreciate your time! If anything is unclear, please let me know.

Best wishes.